Three Ways to Write a TensorFlow Model

How to Write a TensorFlow Model

- Sequential

- Functional

- Subclassing

Using Sequential

import tensorflow as tf

# type 1: add layers one at a time
model = tf.keras.Sequential()
model.add(tf.keras.layers.__layer-name__(__params__))
model.add(tf.keras.layers.__layer-name__(__params__))
model.add(tf.keras.layers.__layer-name__(__params__))

# type 2: pass a list of layers to the constructor
model = tf.keras.Sequential([
    tf.keras.layers.__layer-name__(__params__),
    tf.keras.layers.__layer-name__(__params__),
    tf.keras.layers.__layer-name__(__params__)
])

A Sequential model is a plain stack of layers, so it is not appropriate when the model does not have exactly one input and one output.

Using Functional

import tensorflow as tf

# params
inputs = tf.keras.Input(shape=(__input-shape__))
x = tf.keras.layers.__layer-name__(__params__)(inputs)
x = tf.keras.layers.__layer-name__(__params__)(x)
outputs = tf.keras.layers.__layer-name__(__params__)(x)

# build a model
model = tf.keras.Model(inputs=inputs, outputs=outputs)

A Functional model is created by defining its inputs and outputs.

It supports multiple inputs and multiple outputs.
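
As a minimal sketch of the multiple-input, multiple-output case, the model below takes an image plus a small feature vector and returns a class distribution plus a scalar. The input names (`image`, `meta`), the output names (`digit`, `score`), and every shape here are illustrative assumptions, not part of the exercises in this post.

```python
import tensorflow as tf

# Two inputs (names and shapes are hypothetical, chosen only for illustration)
image_in = tf.keras.Input(shape=(28, 28, 1), name="image")
meta_in = tf.keras.Input(shape=(4,), name="meta")

# Image branch: one convolution, then flatten to a vector
x = tf.keras.layers.Conv2D(8, 3, activation="relu")(image_in)
x = tf.keras.layers.Flatten()(x)

# Merge the image features with the extra feature vector
merged = tf.keras.layers.Concatenate()([x, meta_in])

# Two outputs that share the merged features
digit_out = tf.keras.layers.Dense(10, activation="softmax", name="digit")(merged)
score_out = tf.keras.layers.Dense(1, name="score")(merged)

multi_io_model = tf.keras.Model(inputs=[image_in, meta_in],
                                outputs=[digit_out, score_out])

# Forward pass on a dummy batch of two samples
digit_pred, score_pred = multi_io_model([tf.zeros((2, 28, 28, 1)), tf.zeros((2, 4))])
print(digit_pred.shape, score_pred.shape)
```

A graph like this, with a merge and two heads, cannot be written as a plain `Sequential` stack, which is the main reason to reach for the Functional style.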

Using Subclassing

import tensorflow as tf

class MyModel(tf.keras.Model):
    def __init__(self):
        super(MyModel, self).__init__()
        # define each layer once, under its own attribute name
        self.__mylayer1__ = tf.keras.layers.__layer-name__(__params__)
        self.__mylayer2__ = tf.keras.layers.__layer-name__(__params__)
        self.__mylayer3__ = tf.keras.layers.__layer-name__(__params__)

    def call(self, x):
        # forward pass: apply the layers in order
        x = self.__mylayer1__(x)
        x = self.__mylayer2__(x)
        x = self.__mylayer3__(x)
        return x

Subclassing makes models easy to reuse and also supports multiple inputs and outputs.
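
To make the reuse point concrete, here is a small sketch in which one custom block is defined once and instantiated twice inside a subclassed model that returns two outputs. `ConvBlock`, `TwoHeadModel`, and the layer sizes are hypothetical names and values chosen for illustration only.

```python
import tensorflow as tf

class ConvBlock(tf.keras.layers.Layer):
    """Hypothetical reusable block: Conv2D followed by 2x2 max pooling."""

    def __init__(self, filters):
        super().__init__()
        self.conv = tf.keras.layers.Conv2D(filters, 3, activation="relu")
        self.pool = tf.keras.layers.MaxPool2D()

    def call(self, x):
        return self.pool(self.conv(x))

class TwoHeadModel(tf.keras.Model):
    """Hypothetical model that reuses ConvBlock and returns two outputs."""

    def __init__(self):
        super().__init__()
        self.block1 = ConvBlock(8)
        self.block2 = ConvBlock(16)
        self.flatten = tf.keras.layers.Flatten()
        self.class_head = tf.keras.layers.Dense(10, activation="softmax")
        self.score_head = tf.keras.layers.Dense(1)

    def call(self, x):
        x = self.block2(self.block1(x))
        x = self.flatten(x)
        # Returning a tuple gives the model two outputs
        return self.class_head(x), self.score_head(x)

two_head_model = TwoHeadModel()
class_pred, score_pred = two_head_model(tf.zeros((2, 28, 28, 1)))
print(class_pred.shape, score_pred.shape)
```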

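Exercise 1. MNIST

The MNIST dataset consists of images of handwritten digits and their labels, the digits 0 through 9.

We will build a model that takes an image and classifies it as one of the digits 0 through 9.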

Tensorflow, Datasets, MNIST

Requirements

- Data
  - Load the data
  - Normalize the data (0~255 → 0~1)
  - Expand the data dimensions (newaxis)
- Model
  - A Conv2D layer with 32 filters, kernel size 3, and relu
  - A Conv2D layer with 64 filters, kernel size 3, and relu
  - Flatten to 1 dimension
  - A fully-connected layer with 128 output nodes and relu
  - A fully-connected layer with 10 outputs (the digits 0 through 9) and softmax
- Train
  - Compile the model (optimizer adam, loss cross entropy)
  - Fit the model (5 epochs)
  - Evaluate the model
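
The softmax output and cross-entropy loss named in the requirements can be sketched in plain Python. This is only an illustration of the arithmetic for a single sample with an integer label (the "sparse" form used in the code later in this post), not Keras's implementation; the three scores below are made up.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

def sparse_categorical_crossentropy(label, probs):
    """Loss for one sample: negative log-probability of the true class index."""
    return -math.log(probs[label])

probs = softmax([2.0, 1.0, 0.1])  # made-up scores for a 3-class toy problem
loss_if_label_0 = sparse_categorical_crossentropy(0, probs)  # true class has the highest score
loss_if_label_2 = sparse_categorical_crossentropy(2, probs)  # true class has the lowest score
print(probs, loss_if_label_0, loss_if_label_2)
```

The loss is small when the model puts high probability on the true class and grows as that probability shrinks, which is why it works as a training signal for classification.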

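In a Conv2D layer, the number of filters is the number of kernels (and therefore the number of output channels), and the kernel size is the height and width of each kernel.

A Conv2D layer with 32 filters and kernel size 3 therefore creates 32 kernels of size 3x3, like the one shown in the illustration.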
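
One way to check this reading of "filters" and "kernel size" is to count the trainable parameters of the MNIST model by hand. The sketch below assumes the Keras defaults for Conv2D (no padding, stride 1) and a 28x28x1 input; the helper names are my own.

```python
def conv2d_params(kernel_size, in_channels, filters):
    """Each filter has kernel_size * kernel_size * in_channels weights plus one bias."""
    return kernel_size * kernel_size * in_channels * filters + filters

def dense_params(in_units, out_units):
    """One weight per input-output pair plus one bias per output."""
    return in_units * out_units + out_units

side = 28                          # MNIST images are 28x28 with 1 channel
conv1 = conv2d_params(3, 1, 32)
side = side - 3 + 1                # 26: a 3x3 kernel without padding trims 2 pixels
conv2 = conv2d_params(3, 32, 64)
side = side - 3 + 1                # 24
flat_units = side * side * 64      # size of the flattened vector
dense1 = dense_params(flat_units, 128)
dense2 = dense_params(128, 10)
total_params = conv1 + conv2 + dense1 + dense2
print(conv1, conv2, flat_units, dense1, dense2, total_params)
```

Almost all of the parameters sit in the first fully-connected layer, because the flattened feature map is large.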

As in the illustration, a kernel reads a kernel-sized patch of image pixels at a time and slides across the image until it has covered every position.
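
The sliding-window behavior can be sketched in a few lines of plain Python. This is a simplified single-channel version with no padding, stride 1, no bias, and no activation; the 5x5 toy image and the kernel values are made up for illustration.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` and sum the element-wise products at each position."""
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0
            for di in range(k):
                for dj in range(k):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output

# 5x5 toy image whose pixel values are 0..24, row by row
image = [[r * 5 + c for c in range(5)] for r in range(5)]
# 3x3 kernel that simply picks the center pixel of each patch
kernel = [[0, 0, 0],
          [0, 1, 0],
          [0, 0, 0]]

feature_map = conv2d_valid(image, kernel)
print(feature_map)  # [[6, 7, 8], [11, 12, 13], [16, 17, 18]]
```

A 5x5 input and a 3x3 kernel give a 3x3 output, which is the same shrinkage that takes the MNIST images from 28x28 to 26x26 in the first Conv2D layer.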

Sequential

import tensorflow as tf
import numpy as np

# data
mnist = tf.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()
x_train, x_test = x_train / 255, x_test / 255  # rescaling (0~255 → 0~1)
x_train = x_train[..., np.newaxis]  # add a channel axis: (28, 28) → (28, 28, 1)
x_test = x_test[..., np.newaxis]

# build a model (5 layers)
model = tf.keras.Sequential([
    # 1. filter(kernel channel) = 32, kernel = 3, relu, conv2d layer
    tf.keras.layers.Conv2D(32, 3, activation='relu'),
    # 2. filter = 64, kernel = 3, relu, conv2d layer
    tf.keras.layers.Conv2D(64, 3, activation='relu'),
    # 3. flatten layer
    tf.keras.layers.Flatten(),
    # 4. output = 128 nodes, relu, fully-connected dense layer
    tf.keras.layers.Dense(128, activation='relu'),
    # 5. output = 10 classes, softmax, fully-connected dense layer
    tf.keras.layers.Dense(10, activation='softmax')
])

# compile model
model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])

# fit and evaluate model
model.fit(x_train, y_train, epochs=5)
model.evaluate(x_test, y_test, verbose=2)

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/mnist.npz

11493376/11490434 [==============================] - 0s 0us/step

11501568/11490434 [==============================] - 0s 0us/step

Epoch 1/5

1875/1875 [==============================] - 192s 102ms/step - loss: 0.1094 - accuracy: 0.9668

Epoch 2/5

1875/1875 [==============================] - 189s 101ms/step - loss: 0.0349 - accuracy: 0.9891

Epoch 3/5

1875/1875 [==============================] - 191s 102ms/step - loss: 0.0201 - accuracy: 0.9935

Epoch 4/5

1875/1875 [==============================] - 190s 102ms/step - loss: 0.0124 - accuracy: 0.9961

Epoch 5/5

1875/1875 [==============================] - 191s 102ms/step - loss: 0.0092 - accuracy: 0.9971

313/313 - 7s - loss: 0.0486 - accuracy: 0.9877 - 7s/epoch - 22ms/step

[0.048569709062576294, 0.9876999855041504]

Functional

import tensorflow as tf
import numpy as np

# data
mnist = tf.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()
x_train, x_test = x_train / 255, x_test / 255  # rescaling (0~255 → 0~1)
x_train = x_train[..., np.newaxis]  # add a channel axis: (28, 28) → (28, 28, 1)
x_test = x_test[..., np.newaxis]

# input layer
inputs = tf.keras.Input(shape=(28, 28, 1))
# 1. filter(kernel channel) = 32, kernel = 3, relu, conv2d layer
x = tf.keras.layers.Conv2D(32, 3, activation='relu')(inputs)
# 2. filter = 64, kernel = 3, relu, conv2d layer
x = tf.keras.layers.Conv2D(64, 3, activation='relu')(x)
# 3. flatten layer
x = tf.keras.layers.Flatten()(x)
# 4. output = 128 nodes, relu, fully-connected dense layer
x = tf.keras.layers.Dense(128, activation='relu')(x)
# 5. output = 10 classes, softmax, fully-connected dense layer
outputs = tf.keras.layers.Dense(10, activation='softmax')(x)

# build a model (5 layers)
model = tf.keras.Model(inputs=inputs, outputs=outputs)

# compile model
model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])

# fit and evaluate model
model.fit(x_train, y_train, epochs=5)
model.evaluate(x_test, y_test, verbose=2)

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/mnist.npz

11493376/11490434 [==============================] - 0s 0us/step

11501568/11490434 [==============================] - 0s 0us/step

Epoch 1/5

1875/1875 [==============================] - 190s 101ms/step - loss: 0.1029 - accuracy: 0.9681

Epoch 2/5

1875/1875 [==============================] - 184s 98ms/step - loss: 0.0329 - accuracy: 0.9895

Epoch 3/5

1875/1875 [==============================] - 184s 98ms/step - loss: 0.0192 - accuracy: 0.9938

Epoch 4/5

1875/1875 [==============================] - 187s 100ms/step - loss: 0.0120 - accuracy: 0.9960

Epoch 5/5

1875/1875 [==============================] - 189s 101ms/step - loss: 0.0090 - accuracy: 0.9968

313/313 - 6s - loss: 0.0423 - accuracy: 0.9890 - 6s/epoch - 21ms/step

[0.04232006147503853, 0.9890000224113464]

Subclassing

import tensorflow as tf
import numpy as np

# data
mnist = tf.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()
x_train, x_test = x_train / 255, x_test / 255  # rescaling (0~255 → 0~1)
x_train = x_train[..., np.newaxis]  # add a channel axis: (28, 28) → (28, 28, 1)
x_test = x_test[..., np.newaxis]

# build a model (5 layers)
class MNISTModel(tf.keras.Model):
    def __init__(self):
        super(MNISTModel, self).__init__()
        # 1. filter(kernel channel) = 32, kernel = 3, relu, conv2d layer
        self.Conv2D1 = tf.keras.layers.Conv2D(32, 3, activation='relu')
        # 2. filter = 64, kernel = 3, relu, conv2d layer
        self.Conv2D2 = tf.keras.layers.Conv2D(64, 3, activation='relu')
        # 3. flatten layer
        self.Flatten = tf.keras.layers.Flatten()
        # 4. output = 128 nodes, relu, fully-connected dense layer
        self.Dense1 = tf.keras.layers.Dense(128, activation='relu')
        # 5. output = 10 classes, softmax, fully-connected dense layer
        self.Dense2 = tf.keras.layers.Dense(10, activation='softmax')

    def call(self, x):
        # forward pass: apply the layers in order
        x = self.Conv2D1(x)
        x = self.Conv2D2(x)
        x = self.Flatten(x)
        x = self.Dense1(x)
        x = self.Dense2(x)
        return x

model = MNISTModel()

# compile model
model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])

# fit and evaluate model
model.fit(x_train, y_train, epochs=5)
model.evaluate(x_test, y_test, verbose=2)

Epoch 1/5

1875/1875 [==============================] - 188s 100ms/step - loss: 0.1079 - accuracy: 0.9676

Epoch 2/5

1875/1875 [==============================] - 183s 98ms/step - loss: 0.0347 - accuracy: 0.9894

Epoch 3/5

1875/1875 [==============================] - 182s 97ms/step - loss: 0.0208 - accuracy: 0.9932

Epoch 4/5

1875/1875 [==============================] - 182s 97ms/step - loss: 0.0115 - accuracy: 0.9965

Epoch 5/5

1875/1875 [==============================] - 182s 97ms/step - loss: 0.0101 - accuracy: 0.9966

313/313 - 6s - loss: 0.0486 - accuracy: 0.9878 - 6s/epoch - 21ms/step

[0.04858790710568428, 0.9878000020980835]

Exercise 2. CIFAR-100

The CIFAR-100 dataset consists of images, each labeled with one of 100 classes.

The classes, grouped by superclass, are as follows.

| Superclass | Classes |
| --- | --- |
| aquatic mammals | beaver, dolphin, otter, seal, whale |
| fish | aquarium fish, flatfish, ray, shark, trout |
| flowers | orchids, poppies, roses, sunflowers, tulips |
| food containers | bottles, bowls, cans, cups, plates |
| fruit and vegetables | apples, mushrooms, oranges, pears, sweet peppers |
| household electrical devices | clock, computer keyboard, lamp, telephone, television |
| household furniture | bed, chair, couch, table, wardrobe |
| insects | bee, beetle, butterfly, caterpillar, cockroach |
| large carnivores | bear, leopard, lion, tiger, wolf |
| large man-made outdoor things | bridge, castle, house, road, skyscraper |
| large natural outdoor scenes | cloud, forest, mountain, plain, sea |
| large omnivores and herbivores | camel, cattle, chimpanzee, elephant, kangaroo |
| medium-sized mammals | fox, porcupine, possum, raccoon, skunk |
| non-insect invertebrates | crab, lobster, snail, spider, worm |
| people | baby, boy, girl, man, woman |
| reptiles | crocodile, dinosaur, lizard, snake, turtle |
| small mammals | hamster, mouse, rabbit, shrew, squirrel |
| trees | maple, oak, palm, pine, willow |
| vehicles 1 | bicycle, bus, motorcycle, pickup truck, train |
| vehicles 2 | lawn-mower, rocket, streetcar, tank, tractor |

We will write a model that classifies these varied images into their classes.

Tensorflow, Datasets, CIFAR-100

Requirements

- Data
  - Load the data
  - Normalize the data (0~255 → 0~1)
- Model
  - A Conv2D layer with 16 filters, kernel size 3, and relu
  - A Max-Pooling layer
  - A Conv2D layer with 32 filters, kernel size 3, and relu
  - A Max-Pooling layer
  - Flatten to 1 dimension
  - A fully-connected layer with 256 output nodes and relu
  - A fully-connected layer with 100 outputs (the 100 classes) and softmax
- Train
  - Compile the model with the adam optimizer and a cross-entropy loss
  - Early stopping
  - Fit the model (up to 150 epochs, ended sooner by early stopping)
  - Evaluate the model
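
Before writing the model, it can help to trace how the tensor shape changes through the layers listed above. The sketch below assumes the Keras defaults (Conv2D without padding and with stride 1, MaxPool2D with a 2x2 window) and a 32x32x3 CIFAR-100 input; `trace_shapes` is a hypothetical helper, not a Keras function.

```python
def trace_shapes(input_shape, layers):
    """Return the (height, width, channels) shape after each layer."""
    h, w, c = input_shape
    shapes = [(h, w, c)]
    for kind, value in layers:
        if kind == "conv":      # value = number of filters; 3x3 kernel trims 2 pixels
            h, w, c = h - 2, w - 2, value
        elif kind == "pool":    # value = pool size; leftover rows and columns are dropped
            h, w = h // value, w // value
        shapes.append((h, w, c))
    return shapes

cifar_layers = [("conv", 16), ("pool", 2), ("conv", 32), ("pool", 2)]
shapes = trace_shapes((32, 32, 3), cifar_layers)
final_h, final_w, final_c = shapes[-1]
flat_units = final_h * final_w * final_c  # input size of the first Dense layer
print(shapes, flat_units)
```

Under these assumptions the two pooling layers shrink the feature map to 6x6x32, so the 256-node Dense layer sees 1152 inputs rather than the tens of thousands it would see without pooling.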

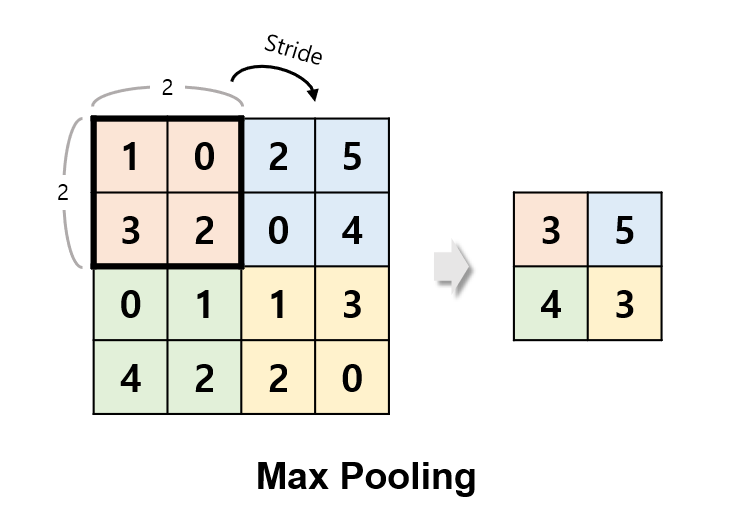

Unlike the MNIST exercise, this one adds Max-Pooling layers and early stopping.

A Max-Pooling layer compresses the feature maps produced by a Conv2D layer by keeping only the largest value in each window.
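
As a rough illustration of that compression, here is 2x2 max pooling with stride 2 on a single-channel feature map in plain Python. The 4x4 values are made up; `MaxPool2D()` with its default arguments applies the same window size to every channel.

```python
def max_pool_2x2(feature_map):
    """Keep the largest value in each non-overlapping 2x2 window."""
    pooled = []
    for i in range(0, len(feature_map) - 1, 2):
        row = []
        for j in range(0, len(feature_map[0]) - 1, 2):
            window = [
                feature_map[i][j], feature_map[i][j + 1],
                feature_map[i + 1][j], feature_map[i + 1][j + 1],
            ]
            row.append(max(window))
        pooled.append(row)
    return pooled

feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [0, 1, 7, 2],
    [6, 2, 3, 4],
]
pooled = max_pool_2x2(feature_map)
print(pooled)  # [[4, 5], [6, 7]]
```

Each output value summarizes four input values, so the height and width are halved while the strongest response in each region is kept.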

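In addition, we introduce early stopping: training ends early once the training loss has failed to improve on its best value for 2 consecutive epochs (`monitor='loss'`, `patience=2`).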
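
The patience rule can be sketched as a small loop. This is a simplified re-implementation for illustration, not the Keras callback itself; `early_stopping_epoch` is a hypothetical helper, and the five loss values are the last five epochs printed in the Sequential training log further down, treated here as if they were a complete run.

```python
def early_stopping_epoch(losses, patience=2):
    """Return the 1-based position at which training would stop, or None if it never stops."""
    best = float("inf")
    wait = 0  # number of consecutive epochs without a new best loss
    for epoch, loss in enumerate(losses, start=1):
        if loss < best:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None

# Training losses of epochs 44 to 48 from the Sequential log
losses = [0.2654, 0.2781, 0.2483, 0.2706, 0.2562]
stop_at = early_stopping_epoch(losses, patience=2)
print(stop_at)  # 5, i.e. the fifth value, which is epoch 48 in the log
```

Note that the counter resets only when the loss beats the best value so far, so a loss that goes down again without setting a new best (0.2706 to 0.2562 here) still counts as a miss.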

Sequential

import tensorflow as tf

# data
cifar100 = tf.keras.datasets.cifar100
(x_train, y_train), (x_test, y_test) = cifar100.load_data()
x_train, x_test = x_train / 255, x_test / 255  # rescaling (0~255 → 0~1)

# build a model
model = tf.keras.Sequential([
    tf.keras.layers.Conv2D(16, 3, activation='relu'),
    tf.keras.layers.MaxPool2D(),
    tf.keras.layers.Conv2D(32, 3, activation='relu'),
    tf.keras.layers.MaxPool2D(),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(256, activation='relu'),
    tf.keras.layers.Dense(100, activation='softmax')
])

# compile model
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

# fit with early stopping (monitors the training loss), then evaluate
es = tf.keras.callbacks.EarlyStopping(monitor='loss', patience=2)
model.fit(x_train, y_train, callbacks=[es], epochs=150)
model.evaluate(x_test, y_test, verbose=2)

output

Epoch 1/150

1563/1563 [==============================] - 38s 24ms/step - loss: 3.6278 - accuracy: 0.1566

Epoch 2/150

1563/1563 [==============================] - 38s 24ms/step - loss: 2.9267 - accuracy: 0.2807

Epoch 3/150

1563/1563 [==============================] - 38s 24ms/step - loss: 2.6397 - accuracy: 0.3353

Epoch 4/150

1563/1563 [==============================] - 38s 24ms/step - loss: 2.4326 - accuracy: 0.3781

Epoch 5/150

1563/1563 [==============================] - 38s 24ms/step - loss: 2.2655 - accuracy: 0.4156

...

Epoch 44/150

1563/1563 [==============================] - 38s 24ms/step - loss: 0.2654 - accuracy: 0.9101

Epoch 45/150

1563/1563 [==============================] - 38s 24ms/step - loss: 0.2781 - accuracy: 0.9080

Epoch 46/150

1563/1563 [==============================] - 37s 24ms/step - loss: 0.2483 - accuracy: 0.9175

Epoch 47/150

1563/1563 [==============================] - 37s 24ms/step - loss: 0.2706 - accuracy: 0.9107

Epoch 48/150

1563/1563 [==============================] - 38s 24ms/step - loss: 0.2562 - accuracy: 0.9151

313/313 - 2s - loss: 9.7880 - accuracy: 0.3069 - 2s/epoch - 7ms/step

[9.788019180297852, 0.3068999946117401]

Functional

import tensorflow as tf

# data
cifar100 = tf.keras.datasets.cifar100
(x_train, y_train), (x_test, y_test) = cifar100.load_data()
x_train, x_test = x_train / 255, x_test / 255  # rescaling (0~255 → 0~1)

# build a model
inputs = tf.keras.Input(shape=(32, 32, 3))
x = tf.keras.layers.Conv2D(16, 3, activation='relu')(inputs)
x = tf.keras.layers.MaxPool2D()(x)
x = tf.keras.layers.Conv2D(32, 3, activation='relu')(x)
x = tf.keras.layers.MaxPool2D()(x)
x = tf.keras.layers.Flatten()(x)
x = tf.keras.layers.Dense(256, activation='relu')(x)
outputs = tf.keras.layers.Dense(100, activation='softmax')(x)
model = tf.keras.Model(inputs=inputs, outputs=outputs)

# compile model
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

# fit with early stopping (monitors the training loss), then evaluate
es = tf.keras.callbacks.EarlyStopping(monitor='loss', patience=2)
model.fit(x_train, y_train, callbacks=[es], epochs=150)
model.evaluate(x_test, y_test, verbose=2)

output

Epoch 1/150

1563/1563 [==============================] - 37s 24ms/step - loss: 3.6107 - accuracy: 0.1575

Epoch 2/150

1563/1563 [==============================] - 37s 23ms/step - loss: 2.8948 - accuracy: 0.2852

Epoch 3/150

1563/1563 [==============================] - 39s 25ms/step - loss: 2.5891 - accuracy: 0.3485

Epoch 4/150

1563/1563 [==============================] - 37s 24ms/step - loss: 2.3797 - accuracy: 0.3930

Epoch 5/150

1563/1563 [==============================] - 37s 23ms/step - loss: 2.2071 - accuracy: 0.4282

...

Epoch 48/150

1563/1563 [==============================] - 38s 24ms/step - loss: 0.2153 - accuracy: 0.9310

Epoch 49/150

1563/1563 [==============================] - 37s 24ms/step - loss: 0.2182 - accuracy: 0.9276

Epoch 50/150

1563/1563 [==============================] - 37s 24ms/step - loss: 0.1978 - accuracy: 0.9357

Epoch 51/150

1563/1563 [==============================] - 37s 24ms/step - loss: 0.2118 - accuracy: 0.9305

Epoch 52/150

1563/1563 [==============================] - 37s 24ms/step - loss: 0.2124 - accuracy: 0.9323

313/313 - 2s - loss: 11.0868 - accuracy: 0.2987 - 2s/epoch - 7ms/step

[11.086834907531738, 0.2987000048160553]

Subclassing

import tensorflow as tf

# data
cifar100 = tf.keras.datasets.cifar100
(x_train, y_train), (x_test, y_test) = cifar100.load_data()
x_train, x_test = x_train / 255, x_test / 255  # rescaling (0~255 → 0~1)

# build a model
class CIFARModel(tf.keras.Model):
    def __init__(self):
        super(CIFARModel, self).__init__()
        self.Conv2D1 = tf.keras.layers.Conv2D(16, 3, activation='relu')
        self.Conv2D2 = tf.keras.layers.Conv2D(32, 3, activation='relu')
        # max pooling has no weights, so one instance is used after both conv layers
        self.MaxPool2D = tf.keras.layers.MaxPool2D()
        self.Flatten = tf.keras.layers.Flatten()
        self.Dense1 = tf.keras.layers.Dense(256, activation='relu')
        self.Dense2 = tf.keras.layers.Dense(100, activation='softmax')

    def call(self, x):
        # forward pass: conv → pool → conv → pool → flatten → dense → dense
        x = self.Conv2D1(x)
        x = self.MaxPool2D(x)
        x = self.Conv2D2(x)
        x = self.MaxPool2D(x)
        x = self.Flatten(x)
        x = self.Dense1(x)
        x = self.Dense2(x)
        return x

model = CIFARModel()

# compile model
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

# fit with early stopping (monitors the training loss), then evaluate
es = tf.keras.callbacks.EarlyStopping(monitor='loss', patience=2)
model.fit(x_train, y_train, callbacks=[es], epochs=150)
model.evaluate(x_test, y_test, verbose=2)

output

Epoch 1/150

1563/1563 [==============================] - 40s 25ms/step - loss: 3.6529 - accuracy: 0.1501

Epoch 2/150

1563/1563 [==============================] - 37s 23ms/step - loss: 2.9314 - accuracy: 0.2802

Epoch 3/150

1563/1563 [==============================] - 37s 23ms/step - loss: 2.6212 - accuracy: 0.3408

Epoch 4/150

1563/1563 [==============================] - 37s 23ms/step - loss: 2.4196 - accuracy: 0.3812

Epoch 5/150

1563/1563 [==============================] - 37s 23ms/step - loss: 2.2583 - accuracy: 0.4156

...

Epoch 50/150

1563/1563 [==============================] - 37s 23ms/step - loss: 0.2845 - accuracy: 0.9049

Epoch 51/150

1563/1563 [==============================] - 37s 24ms/step - loss: 0.2723 - accuracy: 0.9115

Epoch 52/150

1563/1563 [==============================] - 37s 24ms/step - loss: 0.2605 - accuracy: 0.9132

Epoch 53/150

1563/1563 [==============================] - 36s 23ms/step - loss: 0.2719 - accuracy: 0.9109

Epoch 54/150

1563/1563 [==============================] - 36s 23ms/step - loss: 0.2629 - accuracy: 0.9122

313/313 - 2s - loss: 10.7376 - accuracy: 0.2952 - 2s/epoch - 8ms/step

[10.737610816955566, 0.295199990272522]